June 22, 2017

by Dmitri Zimine

In this blog, we show how to scale out a Docker Swarm Cluster based on container workload, so that you don’t over-provision your AWS cluster and pay for just enough instances to run your containers. Learn how we achieved this, watch the 2 min video to see it in action. Read the blog for details. Grab our code recipe and adjust it to your liking and use to auto-scale your Swarm on AWS.

Capacity planning is a lost battle. With uneven, unpredictable workloads, one just can’t get it right: either over-provision and pay for extra capacity, or under-provision and make users suffer with degraded services. Pick how you lose your money.

Orchestrators such as Docker Swarm and Kubernetes are here to solve the capacity problem. They let you scale the services easily and effectively, creating container instances on demand and scheduling them on the underlying cluster.

Scaling a service is really easy with Docker Swarm: one command, or one API call

“`docker service scale redis=4

“`

But what if you run the cluster on AWS, or other public cloud? You have to provision a cluster of ec2 instances and do capacity planning again. Which is a lost battle. Amazon auto-scaling groups is little help, they lose their “auto” magic in case of orchestrators: Elastic Load Balancer is typically irrelevant for Kubernetes and Swarm; and the standard utilization-metric based scaling via CloudWatch alerts becomes inefficient and even dangerous [ 1 ].

Wouldn’t it be great if the cluster can scale based on the container load? If an orchestrator automagically adjusted the size of the cluster by adding more nodes to handle more load,so that all containers have a node to run and there are no idle nodes? Wouldn’t it be great to not have to pay for idle capacity? Absolutely! Kubernetes does exactly this natively on AWS and Azure via auto-scaler.

Docker Swarm? no such luck. There’s nothing like the k8b auto-scaler out of the box. But don’t rush to throw away your Docker Swarm. We can help you make it auto-scale. It’s easy with StackStorm, and we will walk you thru on “how exactly” in the rest of this blog.

The idea is simple: When the cluster fills up on load, create extra worker nodes and add them to the cluster. Swarm will take it from there and distribute the load on workers. An IFTTT-like rule: If a cluster is full, then add worker nodes.

The right task for StackStorm. Just specify a few things precise enough for implementation:

1) How to define a “cluster filled up” event? A scaling trigger is defined as “too many pending tasks”, or, more strictly, “a count of pending tasks is going above a threshold”. A trigger is emitted by StackStorm “swarm.pending_queue” sensor that polls the Swarm for unscheduled tasks and fires when the count goes above threshold (scale-out) or below threshold (scale-in).

2) How to make the swarm cluster tell us that it is “filled up”? If not given a hint, a swarm scheduler thinks the cluster is bottomless and never stops shoveling the containers on the workers. Swarm CPU shares don’t help: they are modeled after VMware and act exactly as shares; the scheduler still shedule everything, just keep the share-based ratio. Solution? Use memory reservations, and watch “pending tasks” count. Memory is not over-provisioned by default; the scheduler will schedule only the tasks the workers can fit, the rest will be “pending tasks”, waiting for resources (memory) to become available. This will trigger swarm.pending_queue described above.

3) How to create and add worker nodes? I chose to use Auto Scaling Groups as a tried and trusted way to scale on AWS, but drop the “auto-” and trigger scaling events from StackStorm. The launch configuration uses a custom AMI with Docker daemon installed and set up, so that new worker instances come up fast. A new node auto-joins the cluster via cloud-init when the instance comes up [ 2 ].

Using ASG is not the only way: you could easily provision new worker instances with st2 workflow, that offers more control over the provisioning process. Or use ASG for creating the node and set up an st2 rule to join a worker to the cluster on ASG lifecycle events. It is your choice: pick an event, set your rules, build your workflows: define a process that matches your operations.

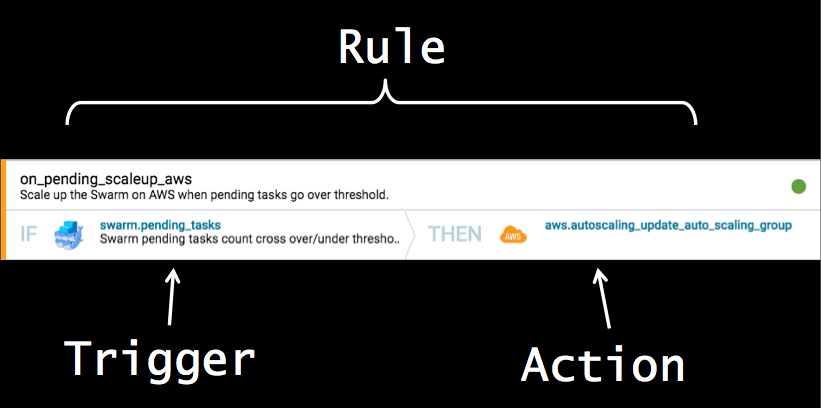

4) How to define autoscaling rule? Rule is simple and self-explanatory:

---

name: on_pending_scaleup_aws

pack: swarm

description: "Scale up the Swarm on AWS when pending tasks go over threshold."

enabled: True

trigger:

type: swarm.pending_tasks

parameters: {}

criteria:

# Crossing threshold up

trigger.over_threshold:

type: equals

pattern: True

action:

ref: aws.autoscaling_update_auto_scaling_group

parameters:

AutoScalingGroupName: swarm-workers-tf

DesiredCapacity: "{{ st2kv.system.count | int + 1 }}"

5) How to simulate a workload? Easy: just tell the swarm to scale the service! Create one with memory reservations and scale it out to to fill up the cluster.

docker service scale redis=4

Now everything is ready. Watch the video to see it all in action.

What about scale-in? I will cover it in the next post. Stay tuned. In the mean time, you can write your own, and win a prize from StackStorm.

You can do it in different ways, on different triggers, using different empirics to constitute a scale-in event. For instance, on queue count going down, wait for chill-down time, drain the node and destroy the worker – a simple 3-steps “workflow” action. Or, configure the Swarm with “binpack strategy” and run a scale-down-if-i-can workflow that checks the number of containers on the nodes and decomissions the empty nodes. Or just reduce the AWS desired capacity (if capacity is over-reduced, the scale-up rule will bring it back). With StackStorm, you define your process to fit your tools and operations.

StackStorm challenge: create your own Swarm scale-up&down solution and share with the StackStorm community. We will send you a prize. Mention @dzimine or @lhill on [Slack](munity.slack.com) or drop us a line at moc.mrotskcatsnull@ofni.

In summary: with this simple StackStorm automation, you can make your Swarm Cluster scale out on AWS with the load, taking exactly as much resources as needed. And more: once you have StackStorm deployed, you will likely find more use to event-driven automation: StackStorm is used for a wide range of automations, from auto-remediation, network automation and security orchestration to serverless, bio-computations, and IoT.

Give it a try and tell us what you think: leave the comments here or join stackstorm-community on Slack (sign up here to talk to the team and fellow automators).

`st2 pack install --base_url=https://github.com/dzimine/swarm_scaling`